AI facial recognition led to 8-months-pregnant woman’s wrongful carjacking arrest in front of kids: lawsuit

Artificial intelligence-powered facial recognition wrongly identified a woman who was eight months pregnant as a suspect in a violent carjacking, according to a lawsuit.

Six police officers swarmed Porcha Woodruff’s Detroit home before 8 a.m. one morning in February while she was getting her 12- and 6-year-old kids ready for school, the federal lawsuit says.

“I have a warrant for your arrest, step outside,” one of the officers told Woodruff, who initially thought it was a joke, according to the lawsuit.

Officers told her she was being arrested for robbery and carjacking.

“Are you kidding, carjacking? Do you see that I am eight months pregnant?” she responded before she was cuffed.

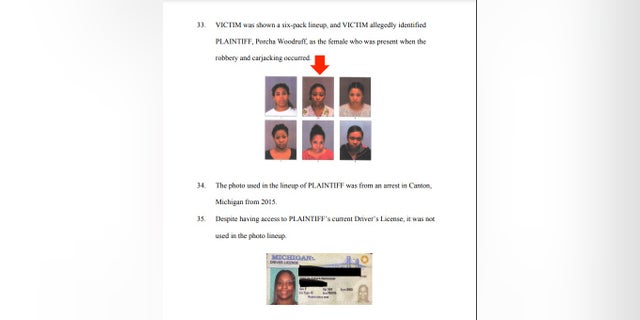

Woodruff, 32, who was living with her fiance and two kids, was implicated as a suspect through a photo lineup shown to the victim “following an unreliable facial recognition match,” the lawsuit says.

The photo used in the lineup was an 8-year-old mug shot from a 2015 arrest in Canton, Michigan, even though police had access to a more recent photo on her driver’s license, according to the lawsuit.

The officers cuffed the pregnant mother in front of her kids.

“She was forced to tell her two children, who stood there crying, to go upstairs and wake Woodruff’s fiance to tell him, ‘Mommy is going to jail,’” the lawsuit says.

Her fiance and her mother, whom he called, tried to defend her to the police, but to no avail.

Woodruff was led into the police vehicle, searched and handcuffed as neighbors and her family watched, the lawsuit says.

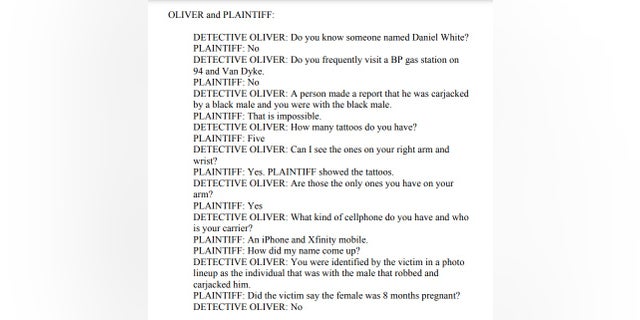

During questioning, police asked about her tattoos, whether she knew a particular man (she did not), and who her cellphone carrier was. According to the lawsuit, none of her answers matched the description of the suspect they were looking for.

At one point, Woodruff asked the detective, “Did the victim say the female was 8 months pregnant?”

The officer responded, “No.”

After the questioning, she spent two more hours in a holding cell before she was arraigned.

“She sat on a concrete bench for approximately 11 hours before being released since there were no beds or chairs available,” the lawsuit alleges.

The stress triggered medical complications involving the baby, as well as dehydration, according to the lawsuit.

All this happened between 7:50 a.m. and 7 p.m. on Feb. 16. By March 7, the case was dismissed for “insufficient evidence.”

Woodruff sued the Detroit Police Department in federal court on Aug. 3.

The Detroit police did not respond to a request for comment from Fox News Digital.

Previous AI wrongful arrests in Detroit

Before the wrongful arrest of Woodruff, who is Black, Detroit police arrested two Black men, Robert Williams and Michael Oliver, in cases that drew wide publicity.

Both men were falsely accused on the basis of AI facial recognition, which sparked a lawsuit from the American Civil Liberties Union.

There are other cases like this across the country, including the wrongful arrest of a Black Georgia woman for purse thefts in Louisiana.

“The need for reform and more accurate investigative methods by the Detroit Police has become evident, as we delve into the troubling implications of facial recognition technology in this case,” the lawsuit says.

Over the last 10 years, dozens of studies in the U.S. alone have produced findings that appear to support the lawsuit’s claims.

A 2019 study by the U.S. National Institute of Standards and Technology (NIST), considered a landmark at the time, is often cited in news articles and other research papers as a starting point.

The study showed that African American and Asian faces were up to 100 times more likely to be misidentified than White faces. Native Americans were misidentified the most often.

Women with darker skin are even more likely to be misidentified by AI-powered facial recognition, according to NIST.

Other academic research around the world, including in the United Kingdom and Canada, has found similar disparities, according to a May report by Amnesty International.

Woodruff’s lawsuit demands an undisclosed amount of money and reparations.

Read the full article Here