‘Feel-good measure’: Google to require visible disclosure in political ads using AI for images and audio

Google is set to require political ads that use artificial intelligence to generate images or sounds to carry a visible disclosure for users.

“AI-generated content should absolutely be disclosed in political advertisements. Not doing so leaves the American people open to misleading and predatory campaign ads,” Ziven Havens, the Policy Director at the Bull Moose Project, told Fox News Digital. “In the absence of government action, we support the creation of new rulemaking to handle the new frontier of technology before it becomes a major problem.”

Havens’ comments come after Google revealed last week that, starting in November, it will require disclosure of the use of AI to alter images in political ads, a little more than a year before the 2024 election, according to a PBS report. The search giant will require that the disclosure attached to the ads be “clear and conspicuous” and placed in an area of the ad that users are likely to notice.

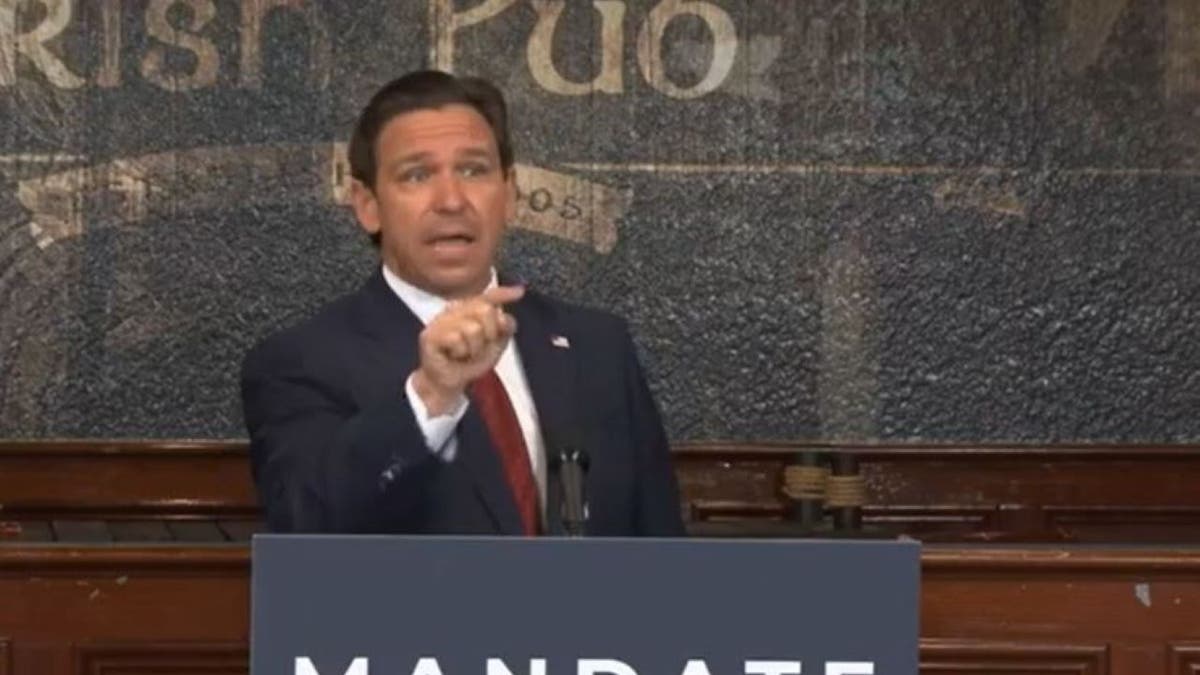

The move comes as political campaigns have begun increasing the use of AI technology in advertising this cycle, including ads by 2024 GOP hopeful Florida Gov. Ron DeSantis and the Republican National Committee.

In one June DeSantis ad that targeted former President Donald Trump, the campaign used realistic fake imagery that depicted the former president hugging Dr. Anthony Fauci. The ad took aim at Trump for failing to fire Fauci during the height of the pandemic, noting that the former president “became a household name” by firing people on television but failed to get rid of the controversial infectious disease expert.

A version of the ad posted on X, the social media platform formerly known as Twitter, contains reader-added context that warns viewers the ad “contains real imagery interspersed with AI-generated imagery of Trump hugging and kissing” Fauci.

Such warnings could now become commonplace in ads placed on Google, though some experts believe the labels are unlikely to make much of a difference.

“I think this is a feel-good measure that accomplishes nothing,” Christopher Alexander, the Chief Analytics Officer of Pioneer Development Group, told Fox News Digital. “An AI getting content wrong or a human deliberately lying? Unless you want to start prosecuting politicians for lying, this is like regulating Colt firearms t-shirts as a gun control measure. This sort of pandering and fearmongering about AI is irresponsible and just stifles innovation while accomplishing nothing useful.”

Last month, the Federal Election Commission (FEC) unveiled plans to regulate AI-generated content in political ads ahead of the 2024 election, according to the PBS report, while lawmakers such as Senate Majority Leader Chuck Schumer, D-N.Y., have expressed interest in pushing legislation to create regulations for AI-generated content.

But Jonathan D. Askonas, an assistant professor of politics and a fellow at the Center for the Study of Statesmanship at The Catholic University of America, questioned how effective such rules would be.

“The real problem is that Google has such a monopolistic chokehold on the advertising industry that its diktats matter more than the FEC,” Askonas told Fox News Digital. “Some kind of disclaimer or labeling seems rather harmless and a kind of nothingburger. What matters more is that policies are applied without partisan bias. Big Tech’s track record is not encouraging.”

A spokesperson for Google told Fox News Digital that the new policy is an addition to the company’s efforts on election ad transparency, which in the past have included “paid for by” disclosures and a public library of ads that allows users to view more information.

“Given the growing prevalence of tools that produce synthetic content, we’re expanding our policies a step further to require advertisers to disclose when their election ads include material that’s been digitally altered or generated,” the spokesperson said. “This update builds on our existing transparency efforts — it’ll help further support responsible political advertising and provide voters with the information they need to make informed decisions.”

Phil Siegel, the founder of the Center for Advanced Preparedness and Threat Response Simulation, or CAPTRS, told Fox News Digital that the “intent” behind the new Google rule is good, but cautioned that how it will be implemented and just how valuable it will be for users remain key questions. He suggested that the FEC could add rules to the current “Stand by Your Ad” provision that would require candidates to disclose the use of AI-generated content.

“For example, ‘I approved this message, and it contained artificial intelligence-generated content,’” Siegel said.

Without that, Siegel said the value of Google’s rule is likely to be “minimal.”

“The value to the viewer of the disclosure seems minimal except perhaps in illustrative cases where someone might take it for hard truth,” he said. “It remains to be seen whether campaigns use the identifier to scale back the use or to use it even more to create even more misleading ads. They could do the latter and claim it was identified as AI-generated and so it’s ok.”
