Nancy Mace previews House hearing on AI deepfakes

Rep. Nancy Mace, R-S.C., is calling for solutions to the wide array of dangers posed by online content falsified using artificial intelligence (AI), known as “deepfakes.”

“These things are only going to become more prevalent if we don’t start discussing the problem and talking to AI experts on how to address deepfakes now and in the future,” Mace told Fox News Digital in a Friday interview.

She hopes to get those answers at next week’s hearing on AI deepfakes, held by the House Oversight Committee’s Subcommittee on Cybersecurity, Information Technology, and Government Innovation, which Mace chairs.

Mace said she hopes the expert witnesses at the Wednesday hearing will “share some of the more egregious examples” of AI deepfakes being used, such as the prevalence of obscene AI-generated images and video.

“Ninety percent of AI deepfakes are pornographic in nature,” Mace said, listing off the dangers of AI-faked content. “There is a significant amount of child pornographic material… being generated, there’s revenge porn.

“And you think about the election next year, and what deepfakes might be generated to come out to sway an election. We see propaganda by deepfakes, and in different countries in different wars.”

An image of what appeared to be an explosion at the Pentagon, later thought likely to have been generated by AI, caused a brief panic and even a temporary dip in financial markets.

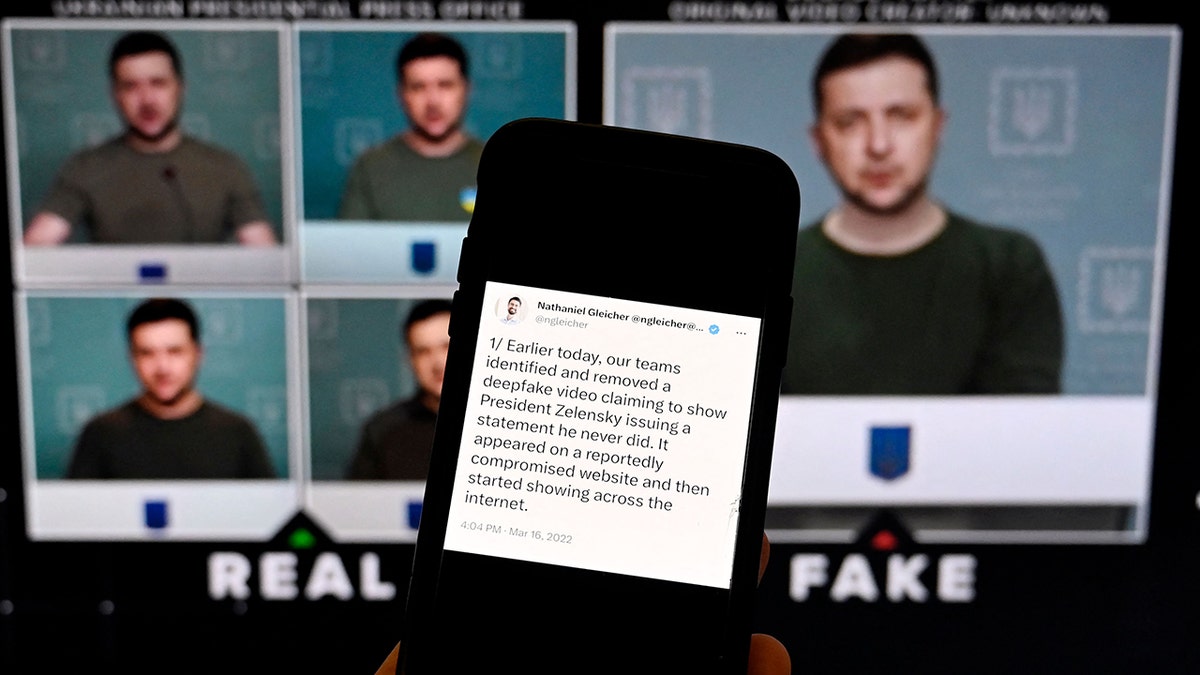

And last year, a deepfake video of Ukrainian President Volodymyr Zelenskyy appearing to yield to Russia’s demands was swiftly detected – but sparked fears about a more sophisticated attempt sometime in the future.

Mace said she plans to ask “how it might affect everyday Americans or elections in the future” and how deepfakes can be better detected, among other issues.

“What are some of the solutions? Is it disclosing the original video or having some kind of a label that this is AI-generated material? You know, disclosures are really good for transparency… labeling might be a way to address it,” she explained.

“But also, we have to make sure we have the technology to be able to detect this is fake, you know, and is it going to get so advanced, that we won’t be able to detect that it’s fake material?”
